In the early-to-mid 2000s, my nerdier friends and I had a reputation for being able to remove malware (back then, we didn’t really call it “malware”) from computers. Save for a few machines we bricked thanks to our novice and woefully incomplete grasp of regedit, the Windows Registry Editor, we would clean the malware off your computer for $20. If we couldn’t do it, there was no charge.

Back then, it was still common to have a desktop computer set up at a computer desk in a “computer room” or “home office,” illuminated by an overhead boob light and the ethereal glow of the then-ubiquitous CRT monitor.

Revisiting the spyware era

The hardest software to remove from computers in the 2000s was a flavor of malware called spyware (or “adware,” which in practice was usually the same thing). The word “spyware” is not often used today because so much software can be classified as spyware in one form or another that the word has lost almost all meaning. The term was popularized by Zone Labs (now part of Check Point), and it took off after a parent noticed an alert from their Zone Labs personal firewall about data being sent back to the Mattel toy company by the children’s edutainment program Reader Rabbit.

The majority of spyware, though, was delivered through a browser. Browser security controls, especially in Internet Explorer, were weak, and the web was still a Wild West of cobbled-together HTML, fan pages, and Flash Player content. If you remember web toolbars, you’ve come to the right era.

Spyware was particularly difficult to remove because its creators had the resources and the financial motivation to keep developing products that were compelling, that purported to offer (or did offer) a legitimate service, and that only worked effectively if they maintained deep persistence in the target operating system.

One of the most notorious spyware operators masquerading as a legitimate business was the Claria Corporation, which essentially invented behavioral marketing. Claria made a piece of pack-in software called Gator eWallet, included as an optional-but-easy-to-miss install with other software of the time (think Kazaa, which is not something I thought I would be writing about in 2025) that was free, of an otherwise questionable nature, or both. Gator eWallet was an autofill program that captured personal data and used it to sell advertising, and users had very limited understanding of how the program actually made that money (by displaying copious amounts of targeted and non-targeted advertising in the form of pop-up ads). If you want a more extensive history of Gator eWallet and how it worked, I found one written by Ernie Smith for Tedium in 2021. Ernie appears to be quite active on Bluesky (I don’t have a Bluesky).

Of note in Smith’s writeup is that an article decrying Claria/Gator’s practices is still available online through PC Matic, even in 2025.

Gator, like most Windows applications, had an uninstaller accessible from the Control Panel, but in practice it either did not work, worked only until the browser was relaunched and the software reinstalled itself, or was undone when Gator came back through some other vector. In any event, artifacts of Gator persisted deeply in Windows XP, and they remained in operation until overwhelming negative consumer sentiment killed the company, despite a couple of failed rebrands.

But it’s OK now…right?

Unfortunately, the negative consumer sentiment that killed Claria and made spyware an untenable business model did not persist. Whether by intent or not, and I suspect the former, tech companies have continued their slow and insidious war against individual privacy for nearly 30 years, and they’re winning.

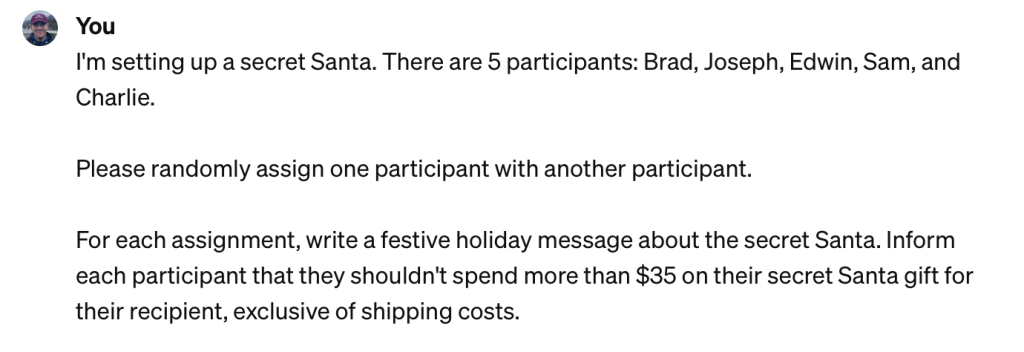

Gator was the first software I thought of when I read an article from TechCrunch about the upcoming Perplexity browser, called Comet: “Perplexity CEO says its browser will track everything users do online to sell ‘hyper personalized’ ads.” To quote Julie Bort from TechCrunch:

“CEO Aravind Srinivas said this week on the TBPN podcast that one reason Perplexity is building its own browser is to collect data on everything users do outside of its own app. This so it can sell premium ads.”

And:

“Srinivas believes that Perplexity’s browser users will be fine with such tracking because the ads should be more relevant to them.”

In case it wasn’t already clear what I was getting at here: you, the user, should not be fine with this. Nor should you be fine with half-baked features like Recall, a Windows feature that will “help you” remember your computer activities but will almost assuredly be used to sell advertisements at some point.

The takes I read on this thing from the webosphere are astounding. The prevailing attitude is one of ambivalence. There is also a sense of fatalistic defeatism, extending all the way to one commenter saying “well, Elon has all of the data anyway,” and other insidious variants of the old “I have nothing to hide” argument.

Y’all, this is not OK.

One of the most compelling papers I have read on the subject of privacy comes not from the cybersecurity space directly, but from law. The 2007 paper is by Daniel Solove, a law professor at The George Washington University Law School, and is called “‘I’ve Got Nothing to Hide’ and Other Misunderstandings of Privacy.” Solove decomposes the argument in plain language, and I highly recommend reading the paper in its entirety. Solove approaches the subject in the context of government surveillance (the paper arrived amid the controversy over the NSA’s warrantless wiretapping program, years before PRISM became public), but it applies wholesale to the current state of advertising tech, and I don’t figure Solove predicted (or could have predicted) the speed and scale of the erosion of digital privacy through 2025.

One of Solove’s more curious conclusions is that privacy is actually poorly defined:

“Ultimately, any attempt to locate a common core to the manifold things we file under the rubric of ‘privacy’ faces a difficult dilemma.”

And lands here:

“The term privacy is best used as a shorthand umbrella term for a related web of things.”

And finally:

“In many instances, privacy is threatened not by singular egregious acts, but by a slow series of relatively minor acts which gradually begin to add up.”

One of the cruxes of Solove’s argument is that you need not be acutely injured to be a victim of the erosion of privacy. The rise and fall of spyware as a “legitimate” service shows that people did in fact perceive injury: from the erosion of their privacy, from their poor understanding of how that privacy was being invaded, and from the constant interruption of pop-up ads. We have since come to accept the structural decline of privacy in exchange for being, ourselves, products to advertisers. Death by a thousand platforms.

How did this happen? We focused too narrowly on the quality of service we get for “free” in exchange for giving up privacy, and fell into the same trap of systematic and systemic myopia that fuels the “I’ve got nothing to hide” argument and (by extension) facilitates the continued enshittification of the web.

On top of all this is a practical consideration: in exchange for serving you “hyper-personalized ads,” what benefit does the Comet browser actually offer you?

For a social media platform, the tradeoff is pretty obvious. For Comet, the benefits aren’t really all that clear. It markets itself extensively as an AI-powered browser. I don’t really know anyone who is interested in an AI-powered browser, and I don’t really understand why companies feel the need to bake AI into anything and everything from the bottom up. But I know what I do not want, and that is “hyper personalized ads.” If I want to use AI today, I can just go to ChatGPT, and ChatGPT does not serve ads (today).

Tech money strikes again

So here is where we get to the thing in the podcast with Perplexity’s CEO, Aravind Srinivas, that spawned the TechCrunch article. Buckle up:

“People like using AI, they think it’s giving them something Google doesn’t offer.”

Yes, the “something” is “information quickly without ads.”

“We want to get data even outside the app to better understand you, because some of the prompts that people do in these AIs is purely work related. It’s not, like, that personal. On the other hand, like, what are things you’re buying? Which hotels are you going…which restaurants are you going to? What are you spending time browsing – tells us so much more about you that we plan to use all the context to build a better user profile…and show some ads there.”

Look. I’m no CEO. I’m not trying to pick on this guy. He’s successful and has a business. I do not. I’m just some fucko on the internet. I get it.

But essentially what he is saying is this: “it’s a problem that people are able to use AI without ads, and we need to solve that problem by showing them ads, and the only way we will get them to pay attention to the ads is by spying on them extensively.”

That is the most big tech-ass big tech shit I have ever heard. I am sure someone in the boardroom at Whatever Ventures came up with this, and everyone thought it was a great idea and went with it. It has nothing to do with providing a good user experience and everything to do with making money.

In conclusion

The point I am trying to make is simply this: we have seen, and continue to see, an erosion of privacy that deserves more consideration than a narrow, fatalistic response like “well, they have my data anyway” or “well, I have nothing to hide.” OK, so stop giving it to them; and “having nothing to hide” is 1) not true and 2) not exactly the point.

I don’t really know how else I can illustrate the extent to which advertising tech creates opportunities for advertisers by using you as the product. I’m not going to suggest that none of these tradeoffs are worth it; sometimes they are. If you are getting real value out of a free social service, that’s fine. All I ask is that you zoom out a little bit to understand the context, value, and nature of the data you are generating for them.