I ran a secret Santa for my distributed friend group this year. It being 2023 and all, I used ChatGPT to do the Santa assignments and generate some creative secret Santa messages, which it did admirably. As usual with ChatGPT, the more detail you give it, the better.

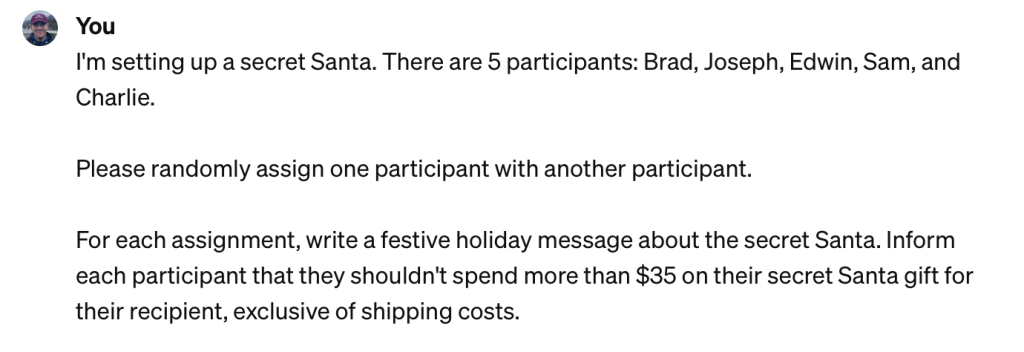

Here was my prompt:

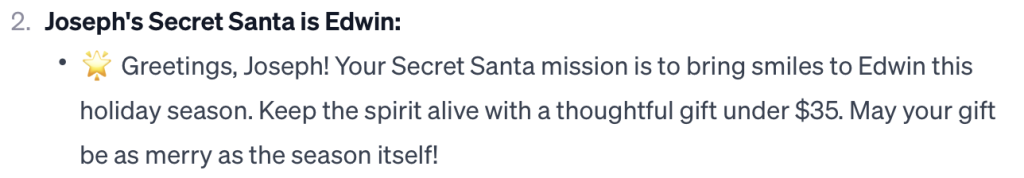

Here is one of the results:

Unfortunately, organizing these things comes with the major drawback of “well, I’m the organizer, so I know who everyone is sending a gift to,” making it only a secret-ish Santa.

There are web-based services that do exactly what I was trying to do with this project, but since none of them have the prerequisite of being a huge nerd, I decided to home-grow my own solution using Python and Ollama, a free tool for running open large language models (such as Meta’s Llama) on your own machine.

I also figured I’d throw some encryption in the mix so the message would actually be a secret, not just to the Santa participants, but to the organizer. So I slapped it together and called it “Secreter Santa.”

If you want to try this thing, you need to be running Python 3, and a recent version, because there is some reliance on the correct ordering/index of lists. That’s something I can change, but this was just a fun thing and a proof of concept. You also need to run Ollama, and there are some libraries you need to import to be able to send the prompt to the LLM and do the cryptography.

Ollama is totally free, and you can download it at https://ollama.ai – the models it runs are not as good as OpenAI’s at handling prompts, which leads to some weird results. I’ll get into that later.
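If you want to poke at Ollama before wiring it into anything, the standard library is enough. This is a minimal sketch, not the Secreter Santa code itself: it assumes Ollama is listening on its default port (11434) and uses its `/api/generate` endpoint, and the model name `llama2` and the helper names `build_request` and `generate` are placeholders for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama2"):
    """Build (but do not send) a POST request for Ollama's generate endpoint."""
    payload = {
        "model": model,    # placeholder model name; use whichever model you pulled
        "prompt": prompt,
        "stream": False,   # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama2"):
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With Ollama running, something like `generate("Write a short secret Santa note telling Ana she is buying for Ben.")` should return the model's text as a string.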

I used a hybrid encryption scheme to do this, since the text the LLM returns is of arbitrary length, and RSA can’t directly encrypt data longer than the key itself (slightly less, in fact, once padding is accounted for).
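To put a number on that limit, here is a small worked calculation. It assumes OAEP padding, which is a common choice but not necessarily what Secreter Santa uses; with OAEP, the largest message RSA can encrypt is the key size in bytes minus twice the hash length minus 2. The function name is made up for this example.

```python
import hashlib


def oaep_max_plaintext(key_bits, hash_name="sha256"):
    """Largest plaintext (in bytes) that RSA-OAEP can encrypt with this key size."""
    key_bytes = key_bits // 8
    hash_len = hashlib.new(hash_name).digest_size
    return key_bytes - 2 * hash_len - 2


print(oaep_max_plaintext(2048))  # 190
print(oaep_max_plaintext(4096))  # 446
```

A 16-byte AES key fits comfortably in either; a chatty LLM message usually does not, which is why RSA only wraps the AES key and AES handles the message.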

How it works:

- Organizer collects the RSA public keys from each participant. There are a fair number of free tools online you can use to generate keys. I wouldn’t use them for production, but for testing they can help.

- The program runs and prompts for the name of each participant, and their RSA public key, which can be pasted in, which is sent to a

dict. - The program uses the

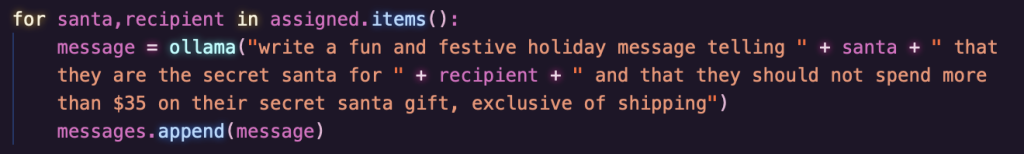

random.shuffle()method to assign the participants to each other. - The program sends a prompt to Ollama, which is assumed to be listening on its default port:

- The program generates a random 16-character AES key for each participant and uses the key to encrypt the message generated from the prompt. The encrypted message is written to a file.

- The program takes the public RSA key for each participant and encrypts their corresponding AES key.

- The encrypted AES key is printed to console.
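The assignment step in the list above can be sketched in a few lines. This is one way to do it with `random.shuffle()`, not necessarily how Secreter Santa does it: shuffle the names, then have each person give to the next one in the shuffled circle, which guarantees nobody draws themselves. The function name and participant names are made up.

```python
import random


def assign_santas(names, rng=random):
    """Return a {giver: recipient} dict in which nobody is assigned to themselves."""
    if len(names) < 2:
        raise ValueError("need at least two participants")
    order = list(names)   # copy so the caller's list is left untouched
    rng.shuffle(order)
    # Each person gives to the next person in the shuffled circle.
    return {giver: order[(i + 1) % len(order)] for i, giver in enumerate(order)}


pairs = assign_santas(["Ana", "Ben", "Cy", "Dee"])
for giver, recipient in pairs.items():
    print(f"{giver} -> {recipient}")
```

The `random` module is fine for a party game. If you want the draw to be unpredictable in a stricter sense, passing `rng=random.SystemRandom()` draws from the operating system's randomness source instead.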

Once the organizer sends both the encrypted message file and the encrypted key to each recipient, each recipient can run the “Unsecret Santa” program to decrypt and display the contents.

Unsecret Santa prompts the user for their message file, their .pem (RSA private key) file, and the encrypted key. It does the work for you from there and displays the message.
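For the curious, the whole encrypt-then-decrypt round trip looks roughly like this. It is a sketch under stated assumptions rather than the actual Secreter/Unsecret Santa code: it uses the third-party `cryptography` package, AES-GCM with a 16-byte key, and RSA-OAEP to wrap that key. The function names are invented for the example, and the key pair is generated on the spot purely for demonstration.

```python
import os

from cryptography.hazmat.primitives import hashes, serialization
from cryptography.hazmat.primitives.asymmetric import padding, rsa
from cryptography.hazmat.primitives.ciphers.aead import AESGCM

# Assumption: OAEP with SHA-256 for wrapping the AES key.
OAEP = padding.OAEP(
    mgf=padding.MGF1(algorithm=hashes.SHA256()),
    algorithm=hashes.SHA256(),
    label=None,
)


def encrypt_for(public_key_pem, message):
    """Organizer side: return (encrypted message blob, RSA-wrapped AES key)."""
    public_key = serialization.load_pem_public_key(public_key_pem)
    aes_key = AESGCM.generate_key(bit_length=128)  # 16 random bytes
    nonce = os.urandom(12)                         # must be unique per message
    ciphertext = AESGCM(aes_key).encrypt(nonce, message.encode("utf-8"), None)
    wrapped_key = public_key.encrypt(aes_key, OAEP)
    return nonce + ciphertext, wrapped_key         # nonce travels with the blob


def decrypt_with(private_key_pem, blob, wrapped_key):
    """Recipient side: unwrap the AES key with RSA, then decrypt the message."""
    private_key = serialization.load_pem_private_key(private_key_pem, password=None)
    aes_key = private_key.decrypt(wrapped_key, OAEP)
    nonce, ciphertext = blob[:12], blob[12:]
    return AESGCM(aes_key).decrypt(nonce, ciphertext, None).decode("utf-8")


# Demo with a throwaway key pair (participants would supply their own).
demo_key = rsa.generate_private_key(public_exponent=65537, key_size=2048)
private_pem = demo_key.private_bytes(
    serialization.Encoding.PEM,
    serialization.PrivateFormat.PKCS8,
    serialization.NoEncryption(),
)
public_pem = demo_key.public_key().public_bytes(
    serialization.Encoding.PEM,
    serialization.PublicFormat.SubjectPublicKeyInfo,
)

blob, wrapped = encrypt_for(public_pem, "You are buying a gift for Ben!")
print(decrypt_with(private_pem, blob, wrapped))
```

The organizer only ever handles `blob` and `wrapped`, both of which are opaque without the recipient's private key.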

So, from a pure security perspective, there are some holes here, but it’s still interesting – unless you’re yanking the unencrypted message out of memory before the encryption step, there’s no way to attribute the message to any author, because it wasn’t written by an author, and the sender of the message has no idea as to the message’s contents. There is some element of digital signing that could happen here too, but let’s not get too far ahead.

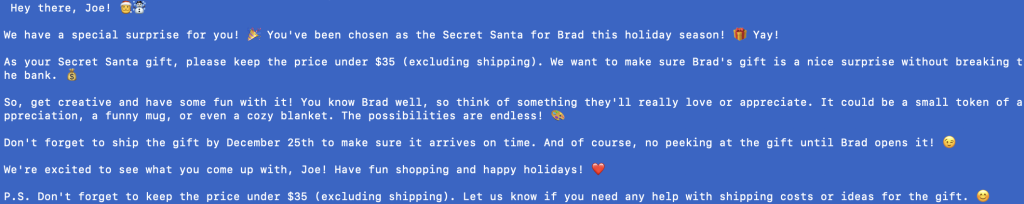

Anyway, this is where I ran into some limitations of Ollama, where it is just a little too eager to offer its…guidance on things it wasn’t asked about.

This result was pretty good, but it’s weird to me that it offered specific gift suggestions without being prompted to do so, which is not something I ever experienced using ChatGPT.

In another response, the model offered “a hint” that the recipient “loved coffee” and specifically asked their Santa to order them coffee as their gift.

The results of the prompt varied pretty wildly in weird and sometimes funny ways. Some of them include lots of Instagram-worthy hashtags, some are quite formal in nature, and others are only a couple of curt sentences. I recently saw a comparison chart of large language models making the rounds on LinkedIn, and I can’t lend that too much credibility (because LinkedIn) but it did have Ollama at the bottom.

Still, ya can’t beat the price.